from monai.networks.nets import SEResNet50

from bioMONAI.data import BioDataLoaders

from pathlib import Path

import numpy as np

Core

Imports

This section includes essential imports used throughout the core library, providing foundational tools for data handling, model training, and evaluation. Key imports cover areas such as data blocks, data loaders, custom loss functions, optimizers, callbacks, and logging.

DataBlock

DataBlock (blocks:list=None, dl_type:TfmdDL=None, getters:list=None, n_inp:int=None, item_tfms:list=None, batch_tfms:list=None, get_items=None, splitter=None, get_y=None, get_x=None)

Generic container to quickly build Datasets and DataLoaders.

| | Type | Default | Details |
|---|---|---|---|
| blocks | list | None | One or more TransformBlocks |
| dl_type | TfmdDL | None | Task specific TfmdDL, defaults to block’s dl_type or TfmdDL |
| getters | list | None | Getter functions applied to results of get_items |
| n_inp | int | None | Number of inputs |
| item_tfms | list | None | ItemTransforms, applied on an item |
| batch_tfms | list | None | Transforms or RandTransforms, applied by batch |
| get_items | NoneType | None | |
| splitter | NoneType | None | |
| get_y | NoneType | None | |
| get_x | NoneType | None | |

The DataBlock class comes from the fastai library and builds datasets and dataloaders from blocks. It acts as a container for data processing pipelines: item transformations, batch transformations, and dataset split methods are defined in one place, which streamlines data preprocessing and loading across the stages of model training.

DataLoaders

DataLoaders (*loaders, path:str|pathlib.Path='.', device=None)

Basic wrapper around several DataLoaders.

The DataLoaders class is a container for managing training and validation datasets. This class wraps one or more DataLoader instances, ensuring seamless data management and transfer across devices (CPU or GPU) for efficient training and evaluation.

Learner

Group together a model, some dls and a loss_func to handle training

The Learner class is the main interface for training machine learning models, encapsulating the model, data, loss function, optimizer, and training metrics. It simplifies the training process by providing built-in functionality for model evaluation, hyperparameter tuning, and training loop customization, allowing you to focus on model optimization.

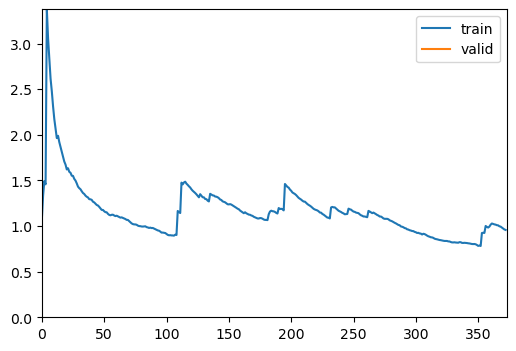

ShowGraphCallback

ShowGraphCallback (after_create=None, before_fit=None, before_epoch=None, before_train=None, before_batch=None, after_pred=None, after_loss=None, before_backward=None, after_cancel_backward=None, after_backward=None, before_step=None, after_cancel_step=None, after_step=None, after_cancel_batch=None, after_batch=None, after_cancel_train=None, after_train=None, before_validate=None, after_cancel_validate=None, after_validate=None, after_cancel_epoch=None, after_epoch=None, after_cancel_fit=None, after_fit=None)

Update a graph of training and validation loss

The ShowGraphCallback is a convenient callback for visualizing training progress. By plotting the training and validation loss, it helps users monitor convergence and performance, making it easy to assess if the model requires adjustments in learning rate, architecture, or data handling.

CSVLogger

CSVLogger (fname='history.csv', append=False)

Log the results displayed in learn.path/fname

The CSVLogger is a tool for logging model training metrics to a CSV file, offering a permanent record of training history. This feature is especially useful for long-term experiments and fine-tuning, allowing you to track and analyze model performance over time.
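Once a training history has been logged, it can be analyzed with standard tools. The sketch below is illustrative only: it assumes the CSV has a header row followed by one row per epoch, and the sample text reuses the column names and values from the training output shown in the fastTrainer example on this page. The helper name `load_history` is hypothetical and not part of the library.

```python
import csv
import io

# Hypothetical contents of a history.csv file. The columns and values are
# copied from the training output in the fastTrainer example on this page.
sample_history = """epoch,train_loss,valid_loss,accuracy,time
0,0.957569,0.669474,0.755841,00:12
"""

def load_history(text):
    """Parse CSV text into a list of per-epoch dicts with numeric metrics."""
    rows = []
    for row in csv.DictReader(io.StringIO(text)):
        # Keep the epoch as an int and the elapsed time as a string.
        parsed = {"epoch": int(row["epoch"]), "time": row["time"]}
        # Every remaining column is treated as a floating-point metric.
        for key, value in row.items():
            if key not in parsed:
                parsed[key] = float(value)
        rows.append(parsed)
    return rows

history = load_history(sample_history)
best = max(history, key=lambda r: r["accuracy"])
print(best["epoch"], best["accuracy"])
```

For a real run, the same function could be given the text of the file at learn.path/fname instead of the sample string.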

cells3d

cells3d ()

3D fluorescence microscopy image of cells.

The returned data is a 3D multichannel array with dimensions provided in (z, c, y, x) order. Each voxel has a size of (0.29 0.26 0.26) micrometer. Channel 0 contains cell membranes, channel 1 contains nuclei.

The cells3d function returns a sample 3D fluorescence microscopy image. This is a valuable test image for demonstration and analysis, consisting of both cell membrane and nucleus channels. It can serve as a default dataset for evaluating and benchmarking new models and transformations.

The dataset has dimensions (60, 2, 256, 256), in (z, c, y, x) order:

- z: 60 slices in the image
- c: 2 channels, where channel 0 represents the cell membrane fluorescence and channel 1 represents the nuclei fluorescence
- y and x: the dimensions of each slice
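The axis order matters when separating channels or converting voxel counts to physical sizes. The following sketch uses a zero-filled stand-in array with the documented shape instead of calling cells3d, so it runs without downloading data; the variable names are illustrative.

```python
import numpy as np

# Stand-in array with the documented (z, c, y, x) shape. In practice this
# would be the array returned by cells3d().
volume = np.zeros((60, 2, 256, 256), dtype=np.uint16)

membranes = volume[:, 0]  # channel 0: cell membranes, shape (z, y, x)
nuclei = volume[:, 1]     # channel 1: nuclei, shape (z, y, x)

# Physical extent in micrometers, using the documented voxel size (z, y, x).
voxel_size_um = (0.29, 0.26, 0.26)
extent_um = tuple(n * s for n, s in zip(membranes.shape, voxel_size_um))

print(membranes.shape, nuclei.shape)
print(extent_um)
```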

Engine

The engine module provides advanced functionalities for model training, including configurable training loops and evaluation functions tailored for bioinformatics applications. It is most useful when a workflow or pipeline has requirements that the generic training loop does not cover. For this reason, the classes fastTrainer and visionTrainer provide tailored implementations that inherit from the Learner class.

read_yaml

read_yaml (yaml_path)

Reads a YAML file and returns its contents as a dictionary

dictlist_to_funclist

dictlist_to_funclist (transform_dicts)

fastTrainer is used for training models in bioinformatics applications, where loss functions and optimizers suited to biological data can be used.

fastTrainer

fastTrainer (dataloaders:fastai.data.core.DataLoaders, model:<built-in function callable>, loss_fn:typing.Any|None=None, optimizer:fastai.optimizer.Optimizer|fastai.optimizer.OptimWrapper=<function Adam>, lr:float|slice=0.001, splitter:<built-in function callable>=<function trainable_params>, callbacks:fastai.callback.core.Callback|collections.abc.MutableSequence|None=None, metrics:typing.Any|collections.abc.MutableSequence|None=None, csv_log:bool=False, show_graph:bool=True, show_summary:bool=False, find_lr:bool=False, find_lr_fn=<function valley>, path:str|pathlib.Path|None=None, model_dir:str|pathlib.Path='models', wd:float|int|None=None, wd_bn_bias:bool=False, train_bn:bool=True, moms:tuple=(0.95, 0.85, 0.95), default_cbs:bool=True)

A custom implementation of the FastAI Learner class for training models in bioinformatics applications.

| | Type | Default | Details |
|---|---|---|---|
| dataloaders | DataLoaders | | The DataLoader objects containing training and validation datasets. |
| model | callable | | A callable model that will be trained on the dataset. |
| loss_fn | typing.Any \| None | None | The loss function to optimize during training. If None, defaults to a suitable default. |
| optimizer | fastai.optimizer.Optimizer \| fastai.optimizer.OptimWrapper | Adam | The optimizer function to use. Defaults to Adam if not specified. |
| lr | float \| slice | 0.001 | Learning rate for the optimizer. Can be a float or a slice object for learning rate scheduling. |
| splitter | callable | trainable_params | A callable that determines which parameters of the model should be updated during training. |
| callbacks | fastai.callback.core.Callback \| collections.abc.MutableSequence \| None | None | Optional list of callback functions to customize training behavior. |
| metrics | typing.Any \| collections.abc.MutableSequence \| None | None | Metrics to evaluate the performance of the model during training. |
| csv_log | bool | False | Whether to log training history to a CSV file. If True, logs will be appended to ‘history.csv’. |
| show_graph | bool | True | |
| show_summary | bool | False | |
| find_lr | bool | False | |
| find_lr_fn | function | valley | |
| path | str \| pathlib.Path \| None | None | The base directory where models are saved or loaded from. Defaults to None. |
| model_dir | str \| pathlib.Path | models | Subdirectory within the base path where trained models are stored. Default is ‘models’. |
| wd | float \| int \| None | None | Weight decay factor for optimization. Defaults to None. |
| wd_bn_bias | bool | False | Whether to apply weight decay to batch normalization and bias parameters. |
| train_bn | bool | True | Whether to update the batch normalization statistics during training. |
| moms | tuple | (0.95, 0.85, 0.95) | Tuple of tuples representing the momentum values for different layers in the model. Defaults to FastAI’s default settings if not specified. |
| default_cbs | bool | True | Automatically include default callbacks such as ShowGraphCallback and CSVLogger. |

Example: train a model with configuration from a YAML file.

# Import the data

image_path = '_data'

info = download_medmnist('bloodmnist', image_path, download_only=True)

batch_size = 32

path = Path(image_path)/'bloodmnist'

path_train = path/'train'

path_val = path/'val'

Downloading https://zenodo.org/records/10519652/files/bloodmnist.npz?download=1 to _data/bloodmnist/bloodmnist.npz

Using downloaded and verified file: _data/bloodmnist/bloodmnist.npz

Saving training images to _data/bloodmnist...

Saving validation images to _data/bloodmnist...

Saving test images to _data/bloodmnist...

Removed bloodmnist.npz

Datasets downloaded to _data/bloodmnist

Dataset info for 'bloodmnist': {'python_class': 'BloodMNIST', 'description': 'The BloodMNIST is based on a dataset of individual normal cells, captured from individuals without infection, hematologic or oncologic disease and free of any pharmacologic treatment at the moment of blood collection. It contains a total of 17,092 images and is organized into 8 classes. We split the source dataset with a ratio of 7:1:2 into training, validation and test set. The source images with resolution 3×360×363 pixels are center-cropped into 3×200×200, and then resized into 3×28×28.', 'url': 'https://zenodo.org/records/10519652/files/bloodmnist.npz?download=1', 'MD5': '7053d0359d879ad8a5505303e11de1dc', 'url_64': 'https://zenodo.org/records/10519652/files/bloodmnist_64.npz?download=1', 'MD5_64': '2b94928a2ae4916078ca51e05b6b800b', 'url_128': 'https://zenodo.org/records/10519652/files/bloodmnist_128.npz?download=1', 'MD5_128': 'adace1e0ed228fccda1f39692059dd4c', 'url_224': 'https://zenodo.org/records/10519652/files/bloodmnist_224.npz?download=1', 'MD5_224': 'b718ff6835fcbdb22ba9eacccd7b2601', 'task': 'multi-class', 'label': {'0': 'basophil', '1': 'eosinophil', '2': 'erythroblast', '3': 'immature granulocytes(myelocytes, metamyelocytes and promyelocytes)', '4': 'lymphocyte', '5': 'monocyte', '6': 'neutrophil', '7': 'platelet'}, 'n_channels': 3, 'n_samples': {'train': 11959, 'val': 1712, 'test': 3421}, 'license': 'CC BY 4.0'}

# Define the dataloader

data = BioDataLoaders.class_from_folder(
    path,
    train='train',
    valid='val',
    vocab=info['label'],
    batch_tfms=None,
    bs=batch_size)

# Define the model

model = SEResNet50(spatial_dims=2,
                   in_channels=3,
                   num_classes=8)

# Define the trainer with configuration from a YAML file

yaml_path = "./data_examples/sample_config.yml"

trainer = fastTrainer.from_yaml(data, model, yaml_path)

# Train the model

trainer.fit(1)

| epoch | train_loss | valid_loss | accuracy | balanced_accuracy_score | precision_score | time |
|---|---|---|---|---|---|---|
| 0 | 0.957569 | 0.669474 | 0.755841 | 0.682645 | 0.767054 | 00:12 |

Better model found at epoch 0 with accuracy value: 0.7558411359786987.

visionTrainer is used for computer vision applications, where image normalization or other vision-specific settings are needed.

visionTrainer

visionTrainer (dataloaders:fastai.data.core.DataLoaders, model:<built-in function callable>, normalize=True, n_out=None, pretrained=True, weights=None, loss_fn:typing.Any|None=None, optimizer:fastai.optimizer.Optimizer|fastai.optimizer.OptimWrapper=<function Adam>, lr:float|slice=0.001, splitter:<built-in function callable>=<function trainable_params>, callbacks:fastai.callback.core.Callback|collections.abc.MutableSequence|None=None, metrics:typing.Any|collections.abc.MutableSequence|None=None, csv_log:bool=False, show_graph:bool=True, show_summary:bool=False, find_lr:bool=False, find_lr_fn=<function valley>, path:str|pathlib.Path|None=None, model_dir:str|pathlib.Path='models', wd:float|int|None=None, wd_bn_bias:bool=False, train_bn:bool=True, moms:tuple=(0.95, 0.85, 0.95), default_cbs:bool=True, cut=None, init=<function kaiming_normal_>, custom_head=None, concat_pool=True, pool=True, lin_ftrs=None, ps=0.5, first_bn=True, bn_final=False, lin_first=False, y_range=None, n_in=3)

Build a vision trainer from dataloaders and model

| | Type | Default | Details |
|---|---|---|---|
| dataloaders | DataLoaders | | The DataLoader objects containing training and validation datasets. |
| model | callable | | A callable model that will be trained on the dataset. |
| normalize | bool | True | |
| n_out | NoneType | None | |
| pretrained | bool | True | |
| weights | NoneType | None | |
| loss_fn | typing.Any \| None | None | The loss function to optimize during training. If None, defaults to a suitable default. |
| optimizer | fastai.optimizer.Optimizer \| fastai.optimizer.OptimWrapper | Adam | The optimizer function to use. Defaults to Adam if not specified. |
| lr | float \| slice | 0.001 | Learning rate for the optimizer. Can be a float or a slice object for learning rate scheduling. |
| splitter | callable | trainable_params | A callable that determines which parameters of the model should be updated during training. |
| callbacks | fastai.callback.core.Callback \| collections.abc.MutableSequence \| None | None | Optional list of callback functions to customize training behavior. |
| metrics | typing.Any \| collections.abc.MutableSequence \| None | None | Metrics to evaluate the performance of the model during training. |
| csv_log | bool | False | Whether to log training history to a CSV file. If True, logs will be appended to ‘history.csv’. |
| show_graph | bool | True | |
| show_summary | bool | False | |
| find_lr | bool | False | |
| find_lr_fn | function | valley | |
| path | str \| pathlib.Path \| None | None | The base directory where models are saved or loaded from. Defaults to None. |
| model_dir | str \| pathlib.Path | models | Subdirectory within the base path where trained models are stored. Default is ‘models’. |
| wd | float \| int \| None | None | Weight decay factor for optimization. Defaults to None. |
| wd_bn_bias | bool | False | Whether to apply weight decay to batch normalization and bias parameters. |
| train_bn | bool | True | Whether to update the batch normalization statistics during training. |
| moms | tuple | (0.95, 0.85, 0.95) | Tuple of tuples representing the momentum values for different layers in the model. Defaults to FastAI’s default settings if not specified. |
| default_cbs | bool | True | Automatically include default callbacks such as ShowGraphCallback and CSVLogger. |
| cut | NoneType | None | model & head args |
| init | function | kaiming_normal_ | |
| custom_head | NoneType | None | |
| concat_pool | bool | True | |
| pool | bool | True | |
| lin_ftrs | NoneType | None | |
| ps | float | 0.5 | |
| first_bn | bool | True | |
| bn_final | bool | False | |
| lin_first | bool | False | |
| y_range | NoneType | None | |
| n_in | int | 3 | |

Evaluation

The evaluation module provides functionalities for model evaluation, with several customizations available.

display_statistics_table

display_statistics_table (stats, fn_name='', as_dataframe=True)

Display a table of the key statistics.

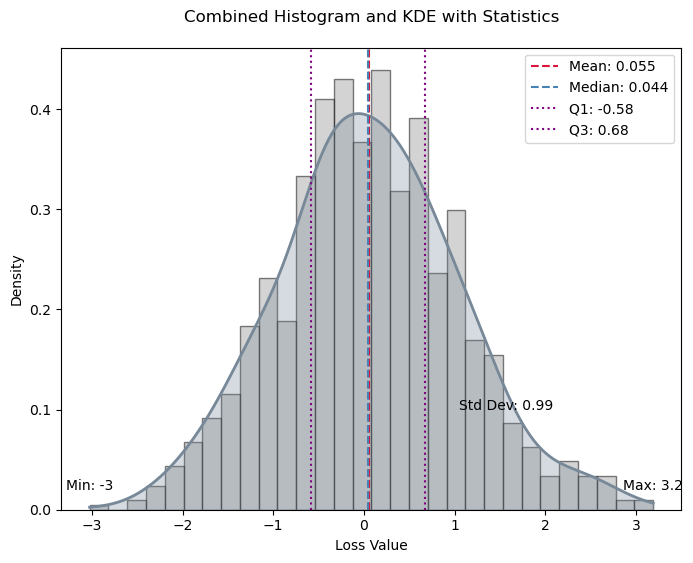

plot_histogram_and_kde

plot_histogram_and_kde (data, stats, bw_method=0.3, fn_name='')

Plot the histogram and KDE of the data with key statistics marked.

format_sig

format_sig (value)

Format numbers with two significant digits.
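As an illustration of what two-significant-digit formatting looks like, the sketch below uses Python's built-in `g` presentation type. It is not the library's implementation, and `format_sig_sketch` is a hypothetical name; the actual output style of format_sig may differ, for example for large values.

```python
def format_sig_sketch(value):
    """Format a number with two significant digits via the 'g' format type."""
    return f"{value:.2g}"

print(format_sig_sketch(3.14159))   # → 3.1
print(format_sig_sketch(0.012345))  # → 0.012
print(format_sig_sketch(123456))    # → 1.2e+05
```

Note that the `g` type switches to scientific notation once the exponent reaches the requested precision, which is why the last value is printed with an exponent.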

calculate_statistics

calculate_statistics (data)

Calculate key statistics for the data.
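The source does not list which statistics are returned. As a rough sketch only, assuming a dictionary of common summary values (the keys below are hypothetical), such a helper could be written with the standard library:

```python
import statistics

def calculate_statistics_sketch(data):
    """Return a dict of common summary statistics for a sequence of numbers."""
    values = sorted(data)
    return {
        "mean": statistics.fmean(values),
        "median": statistics.median(values),
        "std": statistics.stdev(values),  # sample standard deviation
        "min": values[0],
        "max": values[-1],
    }

summary = calculate_statistics_sketch([1.0, 2.0, 3.0, 4.0, 5.0])
print(summary)
```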

compute_metric

compute_metric (predictions, targets, metric_fn)

Compute the metric for each prediction-target pair. Handles cases where metric_fn has or does not have a ‘func’ attribute.
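The handling of the ‘func’ attribute can be pictured as follows. This is a minimal sketch under the assumption that a wrapped metric exposes its underlying function as `func`; the names `compute_metric_sketch`, `WrappedMetric`, and `abs_error` are hypothetical and the library's own logic may differ.

```python
def compute_metric_sketch(predictions, targets, metric_fn):
    """Apply a metric to each prediction-target pair and return the values."""
    # Use the wrapped function when a `func` attribute exists,
    # otherwise call the metric object itself.
    fn = getattr(metric_fn, "func", metric_fn)
    return [fn(p, t) for p, t in zip(predictions, targets)]

def abs_error(pred, target):
    """Absolute error between one prediction and one target."""
    return abs(pred - target)

class WrappedMetric:
    """Hypothetical wrapper that exposes the metric through `func`."""
    def __init__(self, func):
        self.func = func

preds, targs = [1.0, 2.0, 4.0], [1.0, 3.0, 2.0]
plain = compute_metric_sketch(preds, targs, abs_error)
wrapped = compute_metric_sketch(preds, targs, WrappedMetric(abs_error))
print(plain, wrapped)
```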

compute_losses

compute_losses (predictions, targets, loss_fn)

Compute the loss for each prediction-target pair.

from numpy.random import standard_normal

a = standard_normal(1000)

stats = calculate_statistics(a)

plot_histogram_and_kde(a, stats)

evaluate_model and evaluate_classification_model integrate the evaluation process into a single call. evaluate_model can be used on any type of task, whereas evaluate_classification_model is specifically designed for classification tasks.

evaluate_model

evaluate_model (trainer:fastai.learner.Learner, test_data:fastai.data.core.DataLoaders=None, loss=None, metrics=None, bw_method=0.3, show_graph=True, show_table=True, show_results=True, as_dataframe=True, cmap='magma')

Calculate and optionally plot the distribution of loss values from predictions made by the trainer on test data, with an optional table of key statistics.

| | Type | Default | Details |
|---|---|---|---|
| trainer | Learner | | The model trainer object with a get_preds method. |
| test_data | DataLoaders | None | DataLoader containing test data. |
| loss | NoneType | None | Loss function to evaluate prediction-target pairs. |
| metrics | NoneType | None | Single metric or a list of metrics to evaluate. |
| bw_method | float | 0.3 | Bandwidth method for KDE. |
| show_graph | bool | True | Boolean flag to show the histogram and KDE plot. |
| show_table | bool | True | Boolean flag to show the statistics table. |
| show_results | bool | True | Boolean flag to show model results on test data. |
| as_dataframe | bool | True | Boolean flag to display table as a DataFrame. |
| cmap | str | magma | Colormap for visualization. |

evaluate_classification_model

evaluate_classification_model (trainer:fastai.learner.Learner, test_data:fastai.data.core.DataLoaders=None, loss_fn=None, most_confused_n:int=1, normalize:bool=True, metrics=None, bw_method=0.3, show_graph=True, show_table=True, show_results=True, as_dataframe=True, cmap=<matplotlib.colors.LinearSegmentedColormap object at 0x7fc444a9fb90>)

Evaluates a classification model by displaying results, confusion matrix, and most confused classes.

| | Type | Default | Details |
|---|---|---|---|
| trainer | Learner | | The trained model (learner) to evaluate. |
| test_data | DataLoaders | None | DataLoader with test data for evaluation. If None, the validation dataset is used. |
| loss_fn | NoneType | None | Loss function used in the model for ClassificationInterpretation. If None, the loss function is loaded from trainer. |
| most_confused_n | int | 1 | Number of most confused class pairs to display. |
| normalize | bool | True | Whether to normalize the confusion matrix. |
| metrics | NoneType | None | Single metric or a list of metrics to evaluate. |
| bw_method | float | 0.3 | Bandwidth method for KDE. |
| show_graph | bool | True | Boolean flag to show the histogram and KDE plot. |
| show_table | bool | True | Boolean flag to show the statistics table. |
| show_results | bool | True | Boolean flag to show model results on test data. |
| as_dataframe | bool | True | Boolean flag to display table as a DataFrame. |
| cmap | LinearSegmentedColormap | <matplotlib.colors.LinearSegmentedColormap object at 0x7fc444a9fb90> | Color map for the confusion matrix plot. |

Utils

The utils module contains helper functions and classes to facilitate data manipulation, model setup, and training. These utilities add flexibility and convenience, supporting rapid experimentation and efficient data handling.

attributesFromDict

attributesFromDict (d)

The attributesFromDict function simplifies the conversion of dictionary keys and values into object attributes, allowing dynamic attribute creation for configuration objects. This utility is handy for initializing model or dataset configurations directly from dictionaries, improving code readability and maintainability.
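One common pattern for a helper with this signature is to call it with `locals()` inside `__init__`, so that every constructor argument becomes an attribute. The sketch below illustrates that pattern under this assumption; `attributes_from_dict_sketch` and `TrainingConfig` are hypothetical names, and the library's function may behave differently.

```python
def attributes_from_dict_sketch(d):
    """Set every entry of `d` (except 'self') as an attribute on d['self']."""
    d = dict(d)          # work on a copy so the caller's dict is untouched
    obj = d.pop("self")  # the object that receives the attributes
    for name, value in d.items():
        setattr(obj, name, value)

class TrainingConfig:
    """Hypothetical configuration object used only for this illustration."""
    def __init__(self, lr=0.001, batch_size=32):
        # locals() holds 'self', 'lr' and 'batch_size' at this point.
        attributes_from_dict_sketch(locals())

config = TrainingConfig(lr=0.01)
print(config.lr, config.batch_size)
```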

get_device

get_device ()

The get_device function detects whether a CUDA-enabled GPU is available on the machine running the code. If none is available, it returns CPU.

img2float

img2float (image, force_copy=False)

The img2float function turns an image into float representation.

img2Tensor

img2Tensor (image)

The img2Tensor function turns an image into tensor representation after turning it first into float representation.

apply_transforms

apply_transforms (image, transforms)

Apply a list of transformations, ensuring at least one is applied.

| | Details |
|---|---|
| image | The image to transform |
| transforms | A list of transformations to apply |

# If we pass an empty list of transforms, it should return the input unchanged

test_eq(apply_transforms([1, 2], []), [1, 2])
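Sequential application of a list of transforms can be sketched as below. This only illustrates the general idea and the empty-list behavior checked by the test above; it is not the library's code, and `apply_transforms_sketch` is a hypothetical name.

```python
def apply_transforms_sketch(image, transforms):
    """Apply each transform in order, feeding the result into the next one."""
    for transform in transforms:
        image = transform(image)
    return image

def double(values):
    """Multiply every element by two."""
    return [v * 2 for v in values]

def reverse(values):
    """Return the elements in reverse order."""
    return values[::-1]

unchanged = apply_transforms_sketch([1, 2], [])
transformed = apply_transforms_sketch([1, 2], [double, reverse])
print(unchanged, transformed)
```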