Denoising

Setup imports

from bioMONAI.data import *
from bioMONAI.transforms import *
from bioMONAI.core import *
from bioMONAI.core import Path
from bioMONAI.data import get_image_files, get_target, RandomSplitter
from bioMONAI.losses import *
from bioMONAI.losses import SSIMLoss
from bioMONAI.metrics import *
from bioMONAI.datasets import download_file

import warnings
warnings.filterwarnings("ignore")

device = get_device()
print(device)

cuda

Download Data

In the next cell, we will download the dataset required for this tutorial. The dataset is hosted online, and we will use the download_file function from the bioMONAI library to download and extract the files.

- You can change the output_directory variable to specify a different directory where you want to save the downloaded files.
- The url variable contains the link to the dataset. If you have a different dataset, you can replace this URL with the link to your dataset.
- The whole archive is downloaded and extracted, including both the training and the test images.

Make sure you have enough storage space in the specified directory before downloading the dataset.
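The download log below reports the SHA256 hash of the archive and suggests pinning it. As a minimal sketch using only Python's standard library (not a bioMONAI function), the archive can be checked against that value after downloading; sha256_of_file and verify_download are hypothetical helper names.

```python
import hashlib


def sha256_of_file(path, chunk_size=1 << 20):
    """Return the hex SHA256 digest of a file, reading it in 1 MiB chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        # Read until fh.read returns b"" (end of file)
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_download(path, known_hash):
    """Return True if the file matches known_hash, otherwise raise ValueError."""
    actual = sha256_of_file(path)
    if actual != known_hash.lower():
        raise ValueError(f"SHA256 mismatch for {path}: got {actual}, expected {known_hash}")
    return True
```

For example, verify_download(zip_path, "d5f2499874d762a8cd8cc90f34052b663e70071e280ec8af05acb8fdccb4badb"), where zip_path is the path of the downloaded .zip file printed in the log, raises a ValueError if the file has changed. Alternatively, as the log itself suggests, the same value can be passed as known_hash to pooch.retrieve.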

# Specify the directory where you want to save the downloaded files
output_directory = "../_data/U2OS"

# Define the URL of the dataset archive
url = 'http://csbdeep.bioimagecomputing.com/example_data/snr_7_binning_2.zip'

# Download the archive and extract its contents
download_file(url, output_directory, extract=True)

Downloading data from 'http://csbdeep.bioimagecomputing.com/example_data/snr_7_binning_2.zip' to file '/home/export/personal/miguel/git_projects/bioMONAI/nbs/_data/U2OS/128a57f165e1044e34d9a6ef46e66b3c-snr_7_binning_2.zip'.

SHA256 hash of downloaded file: d5f2499874d762a8cd8cc90f34052b663e70071e280ec8af05acb8fdccb4badb

Use this value as the 'known_hash' argument of 'pooch.retrieve' to ensure that the file hasn't changed if it is downloaded again in the future.

Unzipping contents of '/home/export/personal/miguel/git_projects/bioMONAI/nbs/_data/U2OS/128a57f165e1044e34d9a6ef46e66b3c-snr_7_binning_2.zip' to '/home/export/personal/miguel/git_projects/bioMONAI/nbs/_data/U2OS/128a57f165e1044e34d9a6ef46e66b3c-snr_7_binning_2.zip.unzip'

The file has been downloaded and saved to: /home/export/personal/miguel/git_projects/bioMONAI/nbs/_data/U2OS

Prepare Data for Training

In the next cell, we will prepare the data for training. We will specify the path to the training images and define the batch size and patch size. Additionally, we will apply several transformations to the images to augment the dataset and improve the model’s robustness.

- X_path: The path to the directory containing the noisy (low-SNR) training images.
- batch_size (passed as bs): The batch size, which determines the number of images processed together in one iteration.
- patch_size: The size of the patches to be extracted from the images.
- item_tfms: A list of item-level transformations applied to each image, including random cropping, rotation, and flipping.
- batch_tfms: A list of batch-level transformations applied to each batch of images, here percentile-based intensity scaling.
- get_target_fn: A function that returns the corresponding ground-truth image for each noisy input image.
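How get_target('GT', same_filename=True, relative_path=True) resolves targets is not spelled out here. One plausible reading, given the train/low folder used for the inputs, is that each ground-truth image has the same file name inside a GT folder next to low. The sketch below encodes that assumption with pathlib so you can check that every noisy image has a partner before training; target_path_for, find_missing_targets and the *.tif pattern are hypothetical, not part of bioMONAI.

```python
from pathlib import Path


def target_path_for(input_path, target_folder="GT"):
    """Hypothetical mapping: <root>/<split>/low/<name> -> <root>/<split>/<target_folder>/<name>."""
    input_path = Path(input_path)
    # Same file name, in a sibling of the folder holding the noisy input
    return input_path.parent.parent / target_folder / input_path.name


def find_missing_targets(input_dir, target_folder="GT", pattern="*.tif"):
    """List input files whose assumed ground-truth counterpart does not exist."""
    missing = []
    for path in sorted(Path(input_dir).glob(pattern)):
        if not target_path_for(path, target_folder).exists():
            missing.append(path)
    return missing
```

If the assumption about the folder layout holds, find_missing_targets(X_path) returns an empty list.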

You can customize the following parameters to suit your needs:
- Change the X_path variable to point to a different dataset.
- Adjust the batch_size and patch_size variables to match your hardware capabilities and model requirements.
- Modify the transformations in item_tfms and batch_tfms to include other augmentations or preprocessing steps.
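For paired denoising data, a random crop, rotation or flip only makes sense if the same operation is applied to the noisy input and to its ground truth; otherwise the pixels no longer correspond. The following pure-Python sketch illustrates that idea on 2D lists. It is not how RandCropND, RandRot90 or RandFlip are implemented, and it assumes that prob is the probability of applying a transform and that a rotation picks 90, 180 or 270 degrees at random.

```python
import random


def random_crop_pair(x, y, size, rng):
    """Cut the same size x size window out of input x and target y (2D lists)."""
    top = rng.randint(0, len(x) - size)
    left = rng.randint(0, len(x[0]) - size)

    def crop(img):
        return [row[left:left + size] for row in img[top:top + size]]

    return crop(x), crop(y)


def rot90(img):
    """Rotate a 2D list by 90 degrees counter-clockwise."""
    return [list(row) for row in zip(*img)][::-1]


def hflip(img):
    """Mirror a 2D list left to right."""
    return [row[::-1] for row in img]


def augment_pair(x, y, size, p_rot=0.75, p_flip=0.75, rng=None):
    """Random crop, then (with some probability) rotate and flip, identically for x and y."""
    if rng is None:
        rng = random.Random()
    x, y = random_crop_pair(x, y, size, rng)
    if rng.random() < p_rot:
        for _ in range(rng.randint(1, 3)):  # 90, 180 or 270 degrees
            x, y = rot90(x), rot90(y)
    if rng.random() < p_flip:
        x, y = hflip(x), hflip(y)
    return x, y
```

Because every random decision is drawn once and applied to both images, any pixel-wise relation between input and target (here, a simple factor of 2 in the test) survives the augmentation.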

After defining these parameters and transformations, we will create a BioDataLoaders object to load the training and validation datasets.
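The valid_pct and seed entries in the next cell control the random train/validation split. Assuming the split follows fastai's RandomSplitter convention of putting int(valid_pct * n) shuffled items into the validation set, the 2335 + 122 = 2457 images reported below give int(0.05 * 2457) = int(122.85) = 122 validation images. The stdlib sketch below (random_split is a hypothetical helper) reproduces only the sizes; the actual indices differ because a different random number generator is used.

```python
import random


def random_split(n_items, valid_pct=0.2, seed=None):
    """Shuffle item indices and put the first int(valid_pct * n_items) into validation."""
    idx = list(range(n_items))
    random.Random(seed).shuffle(idx)
    cut = int(valid_pct * n_items)
    return idx[cut:], idx[:cut]  # (train indices, validation indices)


train_idx, valid_idx = random_split(2335 + 122, valid_pct=0.05, seed=42)
print(len(train_idx), len(valid_idx))  # 2335 122
```

Fixing the seed makes the split repeatable between runs, which matters when comparing models trained with different settings.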

extract_directory = Path(output_directory)/'128a57f165e1044e34d9a6ef46e66b3c-snr_7_binning_2.zip.unzip'
X_path = extract_directory/'train'/'low'

batch_size = 32
patch_size = 96

# Returns the matching ground-truth ('GT') image for each input image
get_target_fn = get_target('GT', same_filename=True, relative_path=True)

data_ops = {
    'valid_pct': 0.05,  # fraction of data for the validation set
    'seed': 42,  # seed for random number generator
    'bs': batch_size,  # batch size
    'item_tfms': [RandCropND(patch_size),  # item transformations
                  RandRot90(prob=.75),
                  RandFlip(prob=0.75)],
    'batch_tfms': [ScaleIntensityPercentiles()],  # batch transformations
}

data = BioDataLoaders.from_folder(
    X_path,  # input images
    get_target_fn,  # target images
    show_summary=False,  # set to True to print a summary of the dataset
    **data_ops,  # rest of the method arguments
)

# print length of training and validation datasets
print('train images:', len(data.train_ds.items), '\nvalidation images:', len(data.valid_ds.items))

train images: 2335

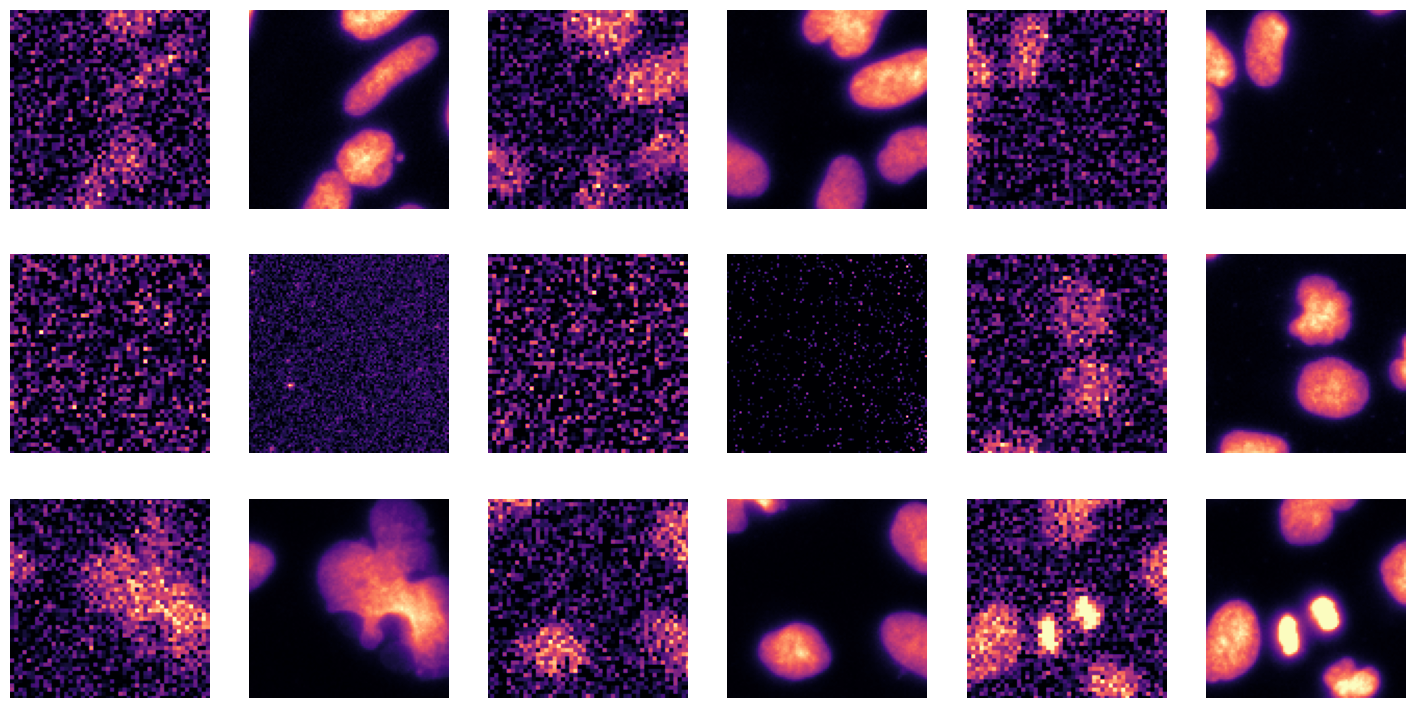

validation images: 122

Visualize a Batch of Training Data

In the next cell, we will visualize a batch of training data to see what the images look like after the transformations have been applied. This is a quick check that the data augmentation and preprocessing steps work as expected.

data.show_batch(cmap='magma'): This function will display a batch of images from the training dataset using the ‘magma’ colormap.

Change the cmap parameter to use a different colormap (e.g., ‘gray’, ‘viridis’, ‘plasma’) based on your preference.

Visualizing the data helps in understanding the dataset better and ensures that the transformations are applied correctly.
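The intensities displayed by show_batch have already been rescaled by ScaleIntensityPercentiles. A common form of percentile normalization maps a low and a high percentile of each image to 0 and 1, which makes it robust to a few very bright or very dark pixels. The sketch below shows that computation in plain Python; the 1st/99th percentile defaults and the optional clipping are assumptions, not necessarily what bioMONAI uses.

```python
def percentile(values, q):
    """Percentile q (0-100) with linear interpolation between sorted values."""
    data = sorted(values)
    if not data:
        raise ValueError("percentile of an empty sequence")
    pos = (len(data) - 1) * q / 100
    lo = int(pos)
    hi = min(lo + 1, len(data) - 1)
    return data[lo] + (data[hi] - data[lo]) * (pos - lo)


def scale_intensity_percentiles(img, lower=1.0, upper=99.0, clip=False, eps=1e-8):
    """Map the lower/upper percentiles of a 2D image (list of rows) to 0 and 1."""
    flat = [v for row in img for v in row]
    p_lo, p_hi = percentile(flat, lower), percentile(flat, upper)
    denom = max(p_hi - p_lo, eps)  # avoid dividing by zero on flat images
    out = [[(v - p_lo) / denom for v in row] for row in img]
    if clip:
        out = [[min(max(v, 0.0), 1.0) for v in row] for row in out]
    return out
```

Without clipping, pixels outside the chosen percentiles fall slightly below 0 or above 1, which keeps information about outliers instead of saturating them.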

data.show_batch(cmap='magma')

Visualize a Specific Image

In the next cell, we will visualize a specific image from the dataset using its index. This step is useful for inspecting individual images and checking that each input comes with the expected target. The do_item method retrieves the item at the given index as an (input, target) pair, and the show method displays the input image (a[0]).

a=data.do_item(100)

a[0].show(cmap='magma');

Define and Train the Model

In the next cell, we will define a 2D U-Net model using the create_unet_model function from the bioMONAI library. The U-Net architecture was introduced for image segmentation, but it is also widely used for image-to-image tasks such as denoising, and it can be customized to suit various applications.

The arguments passed to create_unet_model(resnet34, 1, (128,128), True, n_in=1, cut=7) are:

- resnet34: The backbone of the U-Net model. You can replace this with other backbones like resnet18, resnet50, etc., depending on your requirements.
- 1: The number of output channels. For grayscale images, this should be set to 1. For RGB images, set it to 3.
- (128,128): The input size of the images. Adjust this based on the size of your input images.
- True: Whether to use pre-trained weights for the backbone. Set this to False if you want to train the model from scratch.
- n_in=1: The number of input channels. For grayscale images, this should be set to 1. For RGB images, set it to 3.
- cut=7: The layer at which to cut the backbone. Adjust this based on the architecture of the backbone.

You can customize the following parameters to suit your needs:
- Change the backbone to a different architecture.
- Adjust the input and output channels based on your dataset.
- Modify the input size to match the dimensions of your images.
- Set pretrained to False if you want to train the model from scratch.

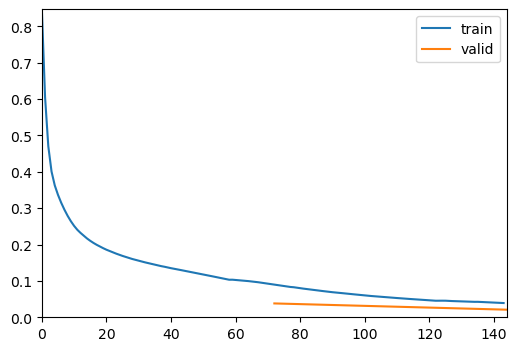

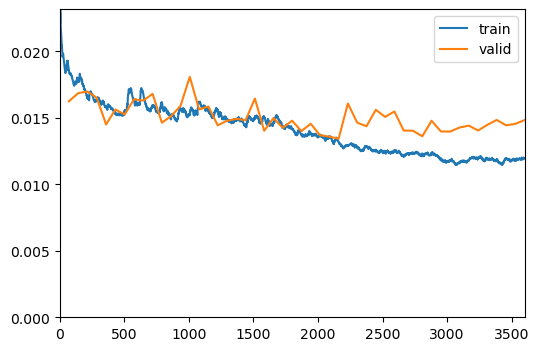

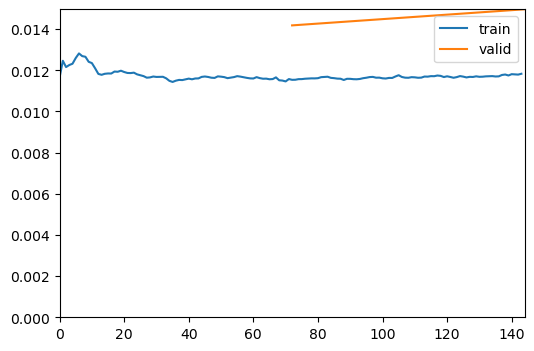

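After defining the model, we will train it with the fastTrainer class, using CombinedLoss as the loss and tracking MSE, MAE and SSIM on the validation set after every epoch. trainer.fine_tune(50, freeze_epochs=2) runs in two phases, as the two output tables show: 2 epochs with the pretrained backbone frozen, followed by 50 epochs in which the whole network is trained.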
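The loss in the next cell is CombinedLoss(mse_weight=0.8, mae_weight=0.1). Reading the arguments as weights of a weighted sum, a minimal sketch of the MSE and MAE part looks like this. Which other term, if any, CombinedLoss adds is not shown in this tutorial, so weighted_mse_mae is a hypothetical helper that covers only these two terms, computed on flattened pixel lists.

```python
def mse(pred, target):
    """Mean squared error over flattened pixel values."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def mae(pred, target):
    """Mean absolute error over flattened pixel values."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)


def weighted_mse_mae(pred, target, mse_weight=0.8, mae_weight=0.1):
    """Hypothetical weighted sum of MSE and MAE (any further CombinedLoss terms omitted)."""
    if len(pred) != len(target) or not pred:
        raise ValueError("pred and target must be non-empty and of equal length")
    return mse_weight * mse(pred, target) + mae_weight * mae(pred, target)
```

For pred = [0.0, 0.5, 1.0] and target = [0.0, 0.0, 1.0], MSE is 0.25/3 and MAE is 0.5/3, so the weighted sum is 0.8 * 0.25/3 + 0.1 * 0.5/3, about 0.0833. The squared term punishes large errors strongly, while the absolute term grows only linearly and is less dominated by a few outlier pixels.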

from bioMONAI.nets import create_unet_model, resnet34

model = create_unet_model(resnet34, 1, (128,128), True, n_in=1, cut=7)
loss = CombinedLoss(mse_weight=0.8, mae_weight=0.1)
metrics = [MSEMetric(), MAEMetric(), SSIMMetric(2)]

trainer = fastTrainer(data, model, loss_fn=loss, metrics=metrics, show_summary=False)
trainer.fine_tune(50, freeze_epochs=2)

| epoch | train_loss | valid_loss | MSE | MAE | SSIM | time |
|---|---|---|---|---|---|---|
| 0 | 0.091283 | 0.038087 | 0.003334 | 0.033882 | 0.679681 | 00:09 |
| 1 | 0.039144 | 0.020872 | 0.003213 | 0.029064 | 0.846050 | 00:09 |

| epoch | train_loss | valid_loss | MSE | MAE | SSIM | time |
|---|---|---|---|---|---|---|
| 0 | 0.018590 | 0.016245 | 0.001428 | 0.020205 | 0.869175 | 00:07 |
| 1 | 0.017735 | 0.016854 | 0.001767 | 0.022272 | 0.867866 | 00:08 |
| 2 | 0.016453 | 0.016980 | 0.001879 | 0.022511 | 0.867746 | 00:08 |
| 3 | 0.016318 | 0.016501 | 0.001660 | 0.021157 | 0.869425 | 00:08 |
| 4 | 0.015802 | 0.014503 | 0.001199 | 0.018310 | 0.882879 | 00:08 |
| 5 | 0.015380 | 0.015624 | 0.001231 | 0.018881 | 0.872488 | 00:08 |
| 6 | 0.015434 | 0.015256 | 0.001402 | 0.019921 | 0.878575 | 00:08 |
| 7 | 0.016333 | 0.016394 | 0.001672 | 0.021574 | 0.871009 | 00:07 |
| 8 | 0.016842 | 0.016297 | 0.001407 | 0.019500 | 0.867791 | 00:08 |
| 9 | 0.015876 | 0.016804 | 0.001347 | 0.019480 | 0.862223 | 00:08 |
| 10 | 0.016188 | 0.014634 | 0.001234 | 0.018485 | 0.882012 | 00:08 |
| 11 | 0.014898 | 0.015135 | 0.001373 | 0.018963 | 0.878599 | 00:07 |
| 12 | 0.015670 | 0.015895 | 0.001535 | 0.020535 | 0.873862 | 00:08 |
| 13 | 0.015179 | 0.018079 | 0.001370 | 0.020026 | 0.850189 | 00:07 |
| 14 | 0.016163 | 0.015631 | 0.001266 | 0.018942 | 0.872760 | 00:08 |
| 15 | 0.015571 | 0.015849 | 0.001280 | 0.019001 | 0.870751 | 00:08 |
| 16 | 0.015575 | 0.014438 | 0.001204 | 0.018123 | 0.883374 | 00:07 |
| 17 | 0.014841 | 0.014776 | 0.001362 | 0.019337 | 0.882474 | 00:08 |
| 18 | 0.014846 | 0.014924 | 0.001550 | 0.020492 | 0.883654 | 00:07 |
| 19 | 0.014523 | 0.014821 | 0.001338 | 0.018680 | 0.881175 | 00:08 |
| 20 | 0.014897 | 0.016450 | 0.001910 | 0.022266 | 0.873053 | 00:07 |
| 21 | 0.015053 | 0.014026 | 0.001173 | 0.017865 | 0.886989 | 00:08 |
| 22 | 0.014654 | 0.014989 | 0.001183 | 0.018154 | 0.877735 | 00:07 |
| 23 | 0.014243 | 0.014246 | 0.001124 | 0.017807 | 0.884340 | 00:08 |
| 24 | 0.014193 | 0.014786 | 0.001117 | 0.017774 | 0.878852 | 00:08 |
| 25 | 0.013763 | 0.014003 | 0.001241 | 0.018323 | 0.888228 | 00:07 |
| 26 | 0.013847 | 0.014553 | 0.001140 | 0.017833 | 0.881418 | 00:08 |
| 27 | 0.013748 | 0.013692 | 0.001058 | 0.017123 | 0.888672 | 00:07 |
| 28 | 0.013593 | 0.013587 | 0.001049 | 0.017127 | 0.889649 | 00:08 |
| 29 | 0.013200 | 0.013431 | 0.001047 | 0.016945 | 0.891008 | 00:07 |
| 30 | 0.012974 | 0.016077 | 0.001290 | 0.018692 | 0.868238 | 00:08 |
| 31 | 0.012834 | 0.014635 | 0.001158 | 0.017853 | 0.880768 | 00:09 |
| 32 | 0.012843 | 0.014368 | 0.001127 | 0.017666 | 0.883002 | 00:10 |
| 33 | 0.012455 | 0.015606 | 0.001213 | 0.018493 | 0.872137 | 00:07 |
| 34 | 0.012464 | 0.015070 | 0.001333 | 0.019086 | 0.879045 | 00:08 |
| 35 | 0.012562 | 0.015481 | 0.001210 | 0.018499 | 0.873372 | 00:08 |
| 36 | 0.012072 | 0.014047 | 0.001047 | 0.017079 | 0.884988 | 00:09 |
| 37 | 0.012390 | 0.014027 | 0.001038 | 0.016993 | 0.885025 | 00:08 |
| 38 | 0.012325 | 0.013614 | 0.001042 | 0.016882 | 0.889081 | 00:09 |
| 39 | 0.012342 | 0.014779 | 0.001063 | 0.017242 | 0.877952 | 00:08 |
| 40 | 0.011910 | 0.013977 | 0.001112 | 0.017367 | 0.886484 | 00:08 |
| 41 | 0.011827 | 0.013966 | 0.001037 | 0.016936 | 0.885579 | 00:08 |
| 42 | 0.011600 | 0.014270 | 0.001051 | 0.017050 | 0.882753 | 00:08 |
| 43 | 0.011793 | 0.014414 | 0.001045 | 0.017061 | 0.881285 | 00:08 |
| 44 | 0.011914 | 0.014048 | 0.001027 | 0.016870 | 0.884611 | 00:08 |
| 45 | 0.011940 | 0.014476 | 0.001088 | 0.017383 | 0.881329 | 00:08 |
| 46 | 0.011864 | 0.014843 | 0.001094 | 0.017490 | 0.877812 | 00:07 |
| 47 | 0.011933 | 0.014440 | 0.001040 | 0.017015 | 0.880933 | 00:08 |
| 48 | 0.011844 | 0.014545 | 0.001059 | 0.017142 | 0.880159 | 00:08 |
| 49 | 0.011932 | 0.014833 | 0.001062 | 0.017238 | 0.877410 | 00:08 |

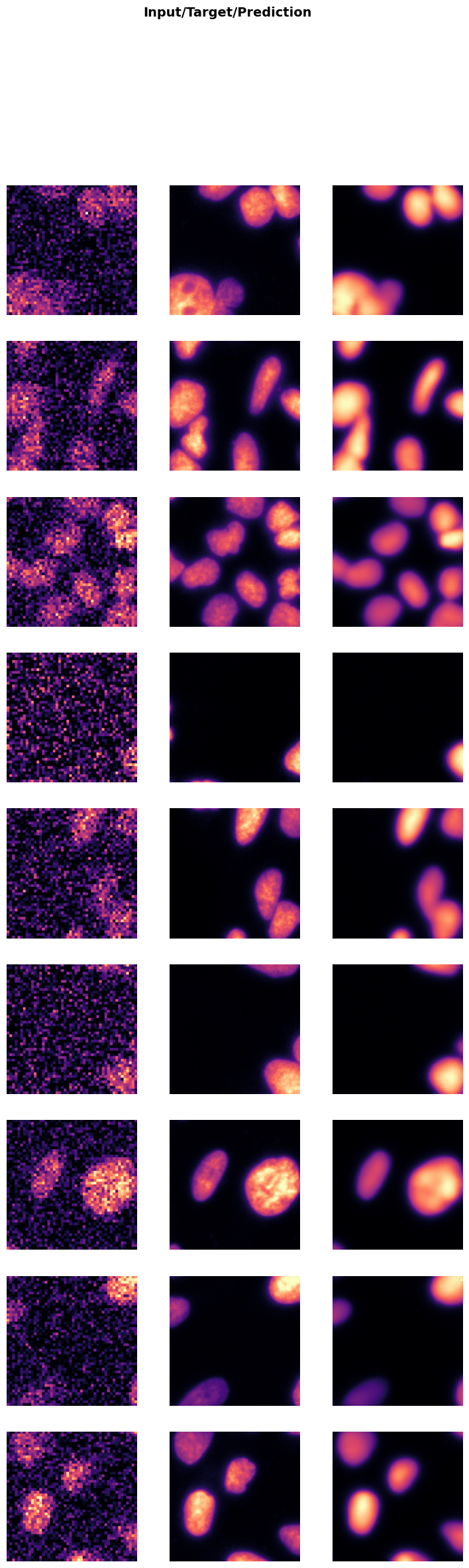

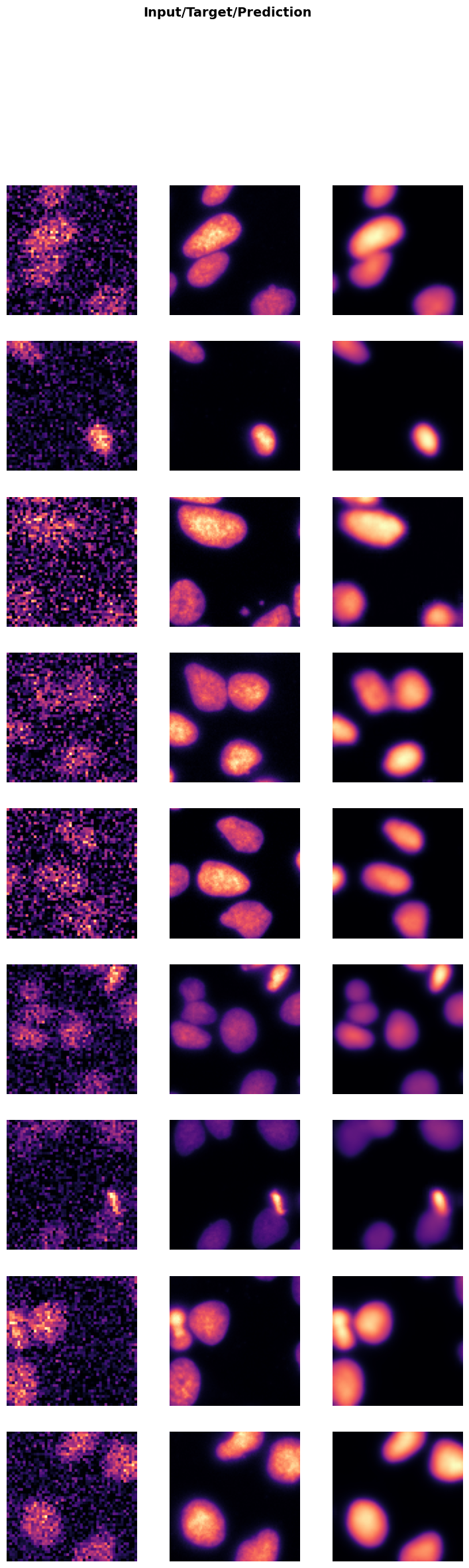

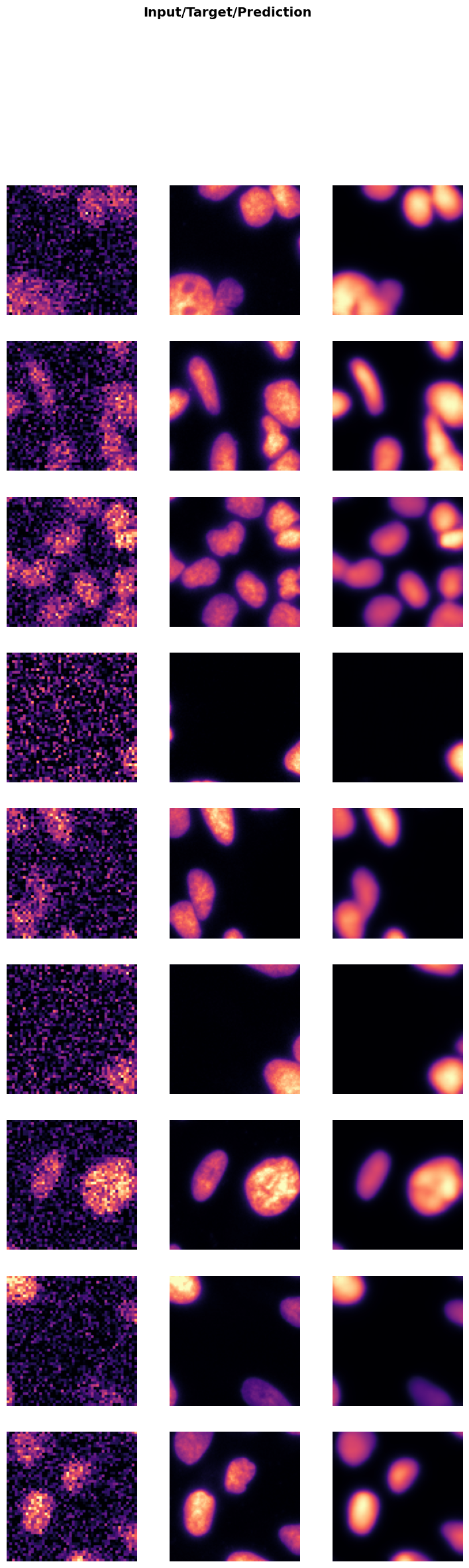

Show Results

In the next cell, we will visualize the results of the trained model on a batch of validation data. This step helps in understanding how well the model has learned to denoise the images.

trainer.show_results(cmap='magma'): This function will display a batch of images from the validation dataset along with their corresponding denoised outputs using the ‘magma’ colormap.

Visualizing the results helps in assessing the performance of the model and identifying any areas that may need further improvement.

trainer.show_results(cmap='magma')

Save the Trained Model

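In the next cell, we will save the trained model to a file so that it can be loaded and used later without retraining. The file holds the model weights, not the architecture, which is why the same model is re-created with create_unet_model before the weights are loaded in the "Load the Model" section.

trainer.save('tmp-model'): This function saves the weights to tmp-model.pth inside a models subfolder of the trainer's path (here the training image folder, as the returned path shows). You can change the filename to something more descriptive based on your project.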

Suggestions for customization:
- Change the filename to include details like the model architecture, dataset, or date (e.g., ‘unet_resnet34_U2OS_2023’).
- Save the model in a specific directory by providing the full path (e.g., ‘models/unet_resnet34_U2OS_2023’).
- Save additional information like training history, metrics, or configuration settings in a separate file for better reproducibility.

Saving the model ensures that you can easily share it with others or deploy it in a production environment without needing to retrain it.
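For the suggestion of saving configuration settings in a separate file, one simple option is to store the settings used in this tutorial next to the weights as a JSON file. The sketch uses only the standard library; save_run_metadata, TUTORIAL_CONFIG and the file name in the example are hypothetical.

```python
import json
from datetime import date
from pathlib import Path

# Settings used in this tutorial, collected for reproducibility
TUTORIAL_CONFIG = {
    "dataset_url": "http://csbdeep.bioimagecomputing.com/example_data/snr_7_binning_2.zip",
    "backbone": "resnet34",
    "patch_size": 96,
    "batch_size": 32,
    "valid_pct": 0.05,
    "seed": 42,
    "fine_tune": {"epochs": 50, "freeze_epochs": 2},
    "loss": {"name": "CombinedLoss", "mse_weight": 0.8, "mae_weight": 0.1},
}


def save_run_metadata(path, config, metrics=None):
    """Write the configuration and optional final metrics to a JSON file."""
    path = Path(path)
    path.parent.mkdir(parents=True, exist_ok=True)  # create the folder if needed
    record = {"saved_on": date.today().isoformat(), "config": config, "metrics": metrics or {}}
    path.write_text(json.dumps(record, indent=2))
    return path
```

For example, save_run_metadata('models/unet_resnet34_U2OS.json', TUTORIAL_CONFIG, {'valid_SSIM': 0.877410}) records the settings together with the validation SSIM of the last training epoch.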

trainer.save('tmp-model')

Path('../_data/U2OS/128a57f165e1044e34d9a6ef46e66b3c-snr_7_binning_2.zip.unzip/train/low/models/tmp-model.pth')

Evaluate the Model on Test Data

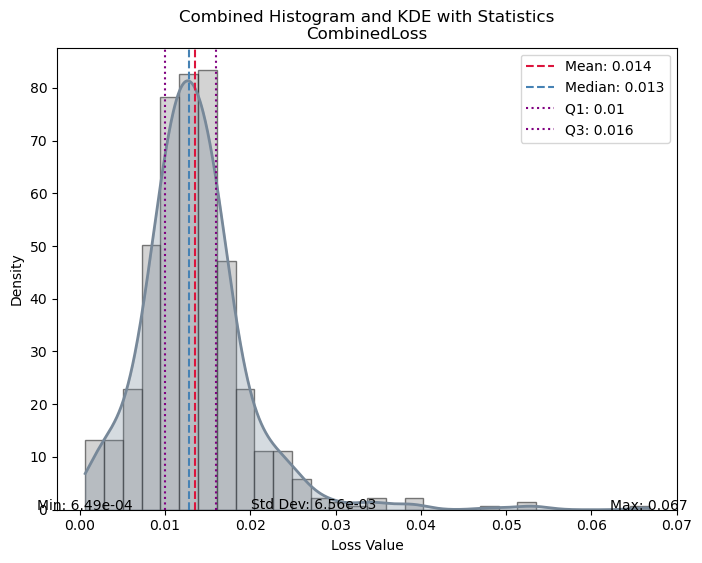

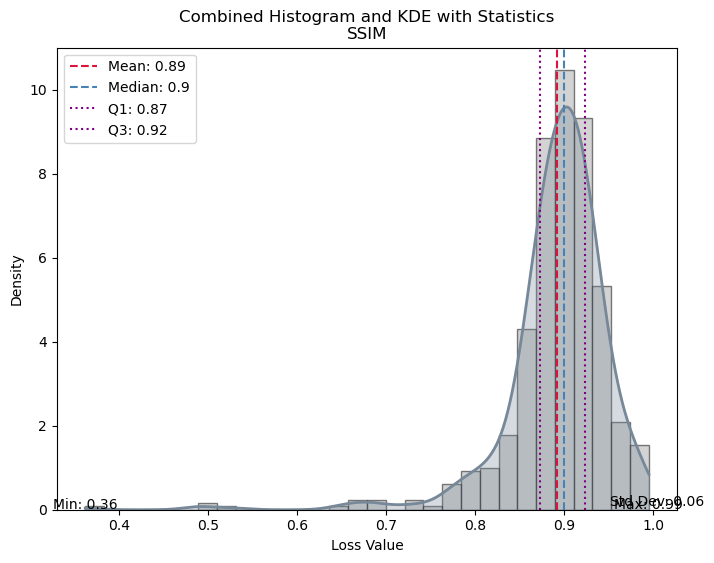

In the next cell, we will evaluate the performance of the trained model on unseen test data. This step is crucial to get an unbiased evaluation of the model’s performance and understand how well it generalizes to new data.

- test_X_path: The path to the directory containing the noisy (low-SNR) test images.
- test_data: A DataLoader created from the test images; with_labels=True makes it load the ground-truth targets as well, so the predictions can be scored.
- evaluate_model(trainer, test_data, metrics=SSIMMetric(2)): This function evaluates the model on the test dataset and reports summary statistics of the loss and of the specified metrics (in this case, SSIM).
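SSIM compares the means, variances and covariance of two images. SSIMMetric(2) presumably computes it over sliding windows in 2 spatial dimensions and averages the result (as MONAI's SSIMMetric(spatial_dims, ...) does); the sketch below is a simplified global variant that evaluates the same formula once over the whole image. The constants k1 = 0.01 and k2 = 0.03 are the usual defaults, and data_range = 1.0 assumes intensities scaled to roughly [0, 1]; global_ssim is a hypothetical helper.

```python
def global_ssim(x, y, data_range=1.0, k1=0.01, k2=0.03):
    """SSIM computed once over the whole image (flattened pixel lists x and y)."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mx, my = sum(x) / n, sum(y) / n
    vx = sum((a - mx) ** 2 for a in x) / (n - 1)
    vy = sum((b - my) ** 2 for b in y) / (n - 1)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y)) / (n - 1)
    # Stabilizing constants avoid division by (near) zero on flat images
    c1, c2 = (k1 * data_range) ** 2, (k2 * data_range) ** 2
    luminance_contrast_structure = (2 * mx * my + c1) * (2 * cov + c2)
    return luminance_contrast_structure / ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2))
```

Identical images score 1, and the score drops as brightness, contrast or structure diverge; inverted structure can even give negative values. A windowed SSIM is more sensitive to local artifacts than this global version.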

Suggestions for customization:
- Change the test_X_path variable to point to a different test dataset.
- Add more metrics to the metrics parameter to get a more comprehensive evaluation (e.g., MSEMetric(), MAEMetric()).
- Save the evaluation results to a file for further analysis or reporting.
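The evaluation tables below report the mean, median, standard deviation, minimum, maximum and quartiles of per-image scores. If you have the per-image values as a list (how to extract them from evaluate_model is not covered here), a stdlib sketch of the same summary and of saving it to CSV could look like this. Whether evaluate_model uses the sample or population standard deviation, and which quartile convention, is an assumption; the sketch uses the sample standard deviation and linear-interpolation quartiles. summarize and write_summary_csv are hypothetical helpers.

```python
import csv
import statistics


def summarize(values):
    """Summary statistics in the same layout as the evaluation tables."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return {
        "Mean": statistics.fmean(values),
        "Median": statistics.median(values),
        "Standard Deviation": statistics.stdev(values),  # sample std (n - 1)
        "Min": min(values),
        "Max": max(values),
        "Q1": q1,
        "Q3": q3,
    }


def write_summary_csv(path, summaries):
    """Write {metric_name: summary_dict} as a CSV with one column per metric."""
    names = list(summaries)
    stats = list(summaries[names[0]])
    with open(path, "w", newline="") as fh:
        writer = csv.writer(fh)
        writer.writerow(["Statistic", *names])
        for stat in stats:
            writer.writerow([stat, *(summaries[name][stat] for name in names)])
```

For example, write_summary_csv('test_metrics.csv', {'SSIM': summarize(ssim_scores)}) would store one column per metric, where ssim_scores is a hypothetical list of per-image SSIM values.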

Evaluating the model on test data helps in understanding its performance in real-world scenarios and identifying any areas that may need further improvement.

test_X_path = extract_directory/'test'/'low'

test_data = data.test_dl(get_image_files(test_X_path), with_labels=True)

# print length of test dataset

print('test images:', len(test_data.items))

evaluate_model(trainer, test_data, metrics=SSIMMetric(2));

test images: 615

| CombinedLoss | Value |
|---|---|
| Mean | 0.013527 |
| Median | 0.012786 |
| Standard Deviation | 0.006557 |
| Min | 0.000649 |
| Max | 0.066723 |
| Q1 | 0.010045 |
| Q3 | 0.015991 |

| SSIM | Value |
|---|---|
| Mean | 0.891894 |
| Median | 0.899943 |
| Standard Deviation | 0.059861 |
| Min | 0.361847 |
| Max | 0.994855 |
| Q1 | 0.873131 |
| Q3 | 0.923070 |

Load the Model

In this step, we will load the previously trained model using the load method of the fastTrainer class. In this example, we will:

- Create a new trainer instance and load the previously saved weights.
- Fine-tune the model for two more epochs.
- Evaluate the model on the test data again.
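The extra training below uses fit_one_cycle(2, lr_max=2e-4). In fastai's one-cycle policy the learning rate warms up from lr_max / div to lr_max over the first pct_start fraction of the steps and then anneals with a cosine towards lr_max / div_final. The sketch below computes that curve with fastai's documented defaults (div=25, div_final=1e5, pct_start=0.25), assuming fastTrainer passes them through unchanged; cos_anneal and one_cycle_lr are hypothetical helpers and momentum scheduling is omitted.

```python
import math


def cos_anneal(start, end, pos):
    """Cosine interpolation from start (pos=0) to end (pos=1)."""
    return start + (1 + math.cos(math.pi * (1 - pos))) * (end - start) / 2


def one_cycle_lr(step, total_steps, lr_max, div=25.0, div_final=1e5, pct_start=0.25):
    """Learning rate at a given step of a one-cycle schedule (warm-up, then anneal)."""
    pct = step / max(total_steps - 1, 1)  # progress through training, 0 to 1
    if pct < pct_start:
        return cos_anneal(lr_max / div, lr_max, pct / pct_start)
    return cos_anneal(lr_max, lr_max / div_final, (pct - pct_start) / (1 - pct_start))
```

With lr_max = 2e-4 and 101 steps, the rate starts at 8e-6, peaks at 2e-4 at step 25 and decays to about 2e-9 at the last step. The short warm-up avoids disturbing the loaded weights with large updates right away.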

model = create_unet_model(resnet34, 1, (128,128), True, n_in=1, cut=7)

loss = CombinedLoss(mse_weight=0.8, mae_weight=0.1)

metrics = [MSEMetric(), MAEMetric(), SSIMMetric(2)]

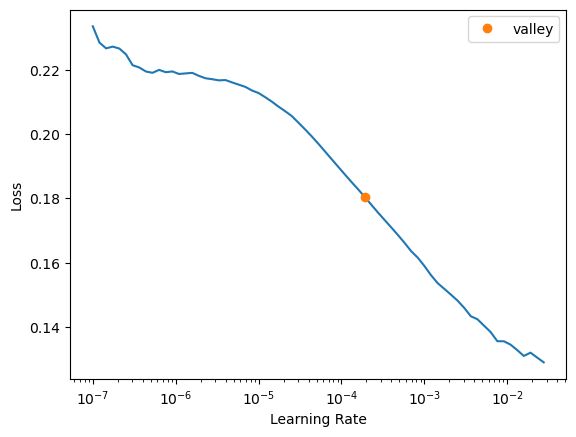

trainer2 = fastTrainer(data, model, loss_fn=loss, metrics=metrics, show_summary=False, find_lr=True)

# Load saved model

trainer2.load('tmp-model')

# Train two additional epochs with the one-cycle policy
trainer2.fit_one_cycle(2, lr_max=2e-4)

# Evaluate the model on the test dataset
evaluate_model(trainer2, test_data, metrics=metrics, show_graph=False);

Inferred learning rate: 0.0002

| epoch | train_loss | valid_loss | MSE | MAE | SSIM | time |
|---|---|---|---|---|---|---|
| 0 | 0.011576 | 0.014184 | 0.001200 | 0.018013 | 0.885774 | 00:08 |
| 1 | 0.011833 | 0.014967 | 0.001053 | 0.017201 | 0.875956 | 00:09 |

| CombinedLoss | Value |
|---|---|
| Mean | 0.013745 |
| Median | 0.013117 |
| Standard Deviation | 0.006751 |
| Min | 0.000657 |
| Max | 0.066316 |
| Q1 | 0.010192 |
| Q3 | 0.016188 |

| MSE | Value |
|---|---|
| Mean | 0.001185 |
| Median | 0.001085 |
| Standard Deviation | 0.000799 |
| Min | 0.000002 |
| Max | 0.004928 |
| Q1 | 0.000643 |
| Q3 | 0.001567 |

| MAE | Value |
|---|---|
| Mean | 0.017776 |
| Median | 0.018082 |
| Standard Deviation | 0.008009 |
| Min | 0.001321 |
| Max | 0.044682 |
| Q1 | 0.012950 |
| Q3 | 0.022790 |

| SSIM | Value |
|---|---|
| Mean | 0.889803 |
| Median | 0.898358 |
| Standard Deviation | 0.062184 |
| Min | 0.365174 |
| Max | 0.994775 |
| Q1 | 0.870843 |
| Q3 | 0.921133 |